Hidden Threats to Publishers, Ad Networks, SaaS, and E-Commerce

WAF360 Team

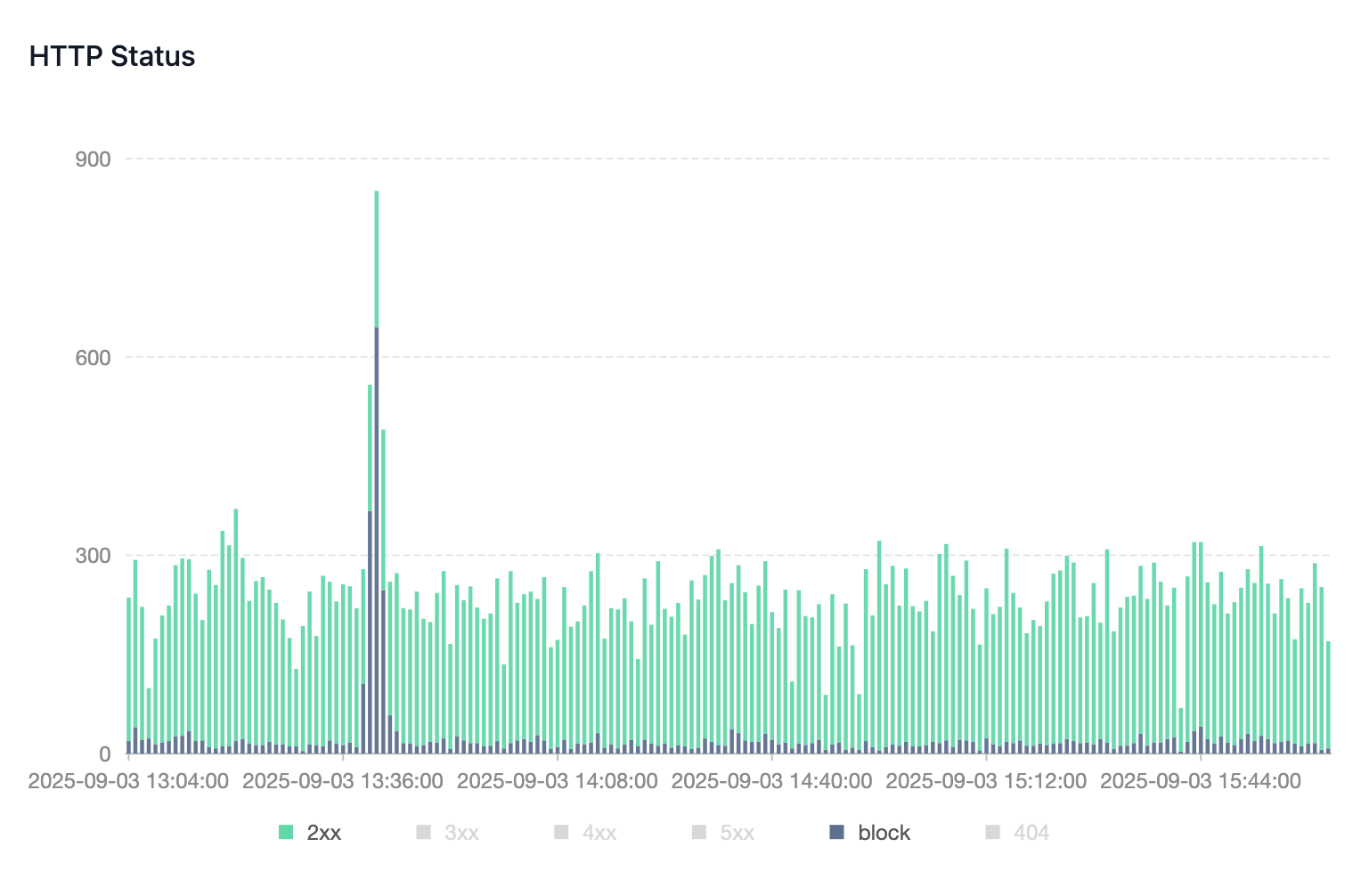

When most people think about website traffic problems, scrapers usually come to mind. While scrapers are indeed a major challenge, they are far from the only threat publishers, ad networks, SaaS platforms, and e-commerce sites face. On the open web, anyone can send requests to your website, and without proper traffic management, these requests can cause a range of issues.

Uncontrolled traffic can drive up server and CDN costs, slow down your website, or even trigger downtime, all of which directly affect revenue. Third-party vendors that charge per pageview or traffic usage can further increase expenses. Invalid traffic (IVT) reduces the effectiveness of your ad inventory, and media buyers end up spending on impressions that provide no real value.

Beyond scrapers, there are several other types of traffic that can silently impact your site’s performance and revenue. Understanding and addressing these threats is crucial for maintaining a reliable and efficient online presence.

1. 404 Attacks

Some attackers deliberately target 404 pages, sending traffic to URLs that don't exist. Because responses for nonexistent URLs are usually not served from cache, these requests pass through caching layers and hit your application servers directly, creating unnecessary load and cost. To stay protected, you need visibility into abnormal 404 patterns so you can filter them before they impact performance.
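As a rough illustration of what "visibility into abnormal 404 patterns" can mean in practice, the Python sketch below counts 404 responses per client IP over a sliding time window and flags clients that exceed a threshold. The class name `NotFoundMonitor` and the default limits (20 misses per 60 seconds) are hypothetical choices for this example, not WAF360 settings. A real deployment would feed it from access logs or an edge hook and tune the numbers to its own traffic.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class NotFoundMonitor:
    """Flags clients that trigger too many 404 responses in a sliding window.

    The class name and default thresholds are hypothetical, chosen for illustration.
    """

    def __init__(self, max_404s: int = 20, window_seconds: float = 60.0) -> None:
        self.max_404s = max_404s
        self.window_seconds = window_seconds
        # Timestamps of recent 404 responses, keyed by client IP.
        self._hits: Dict[str, Deque[float]] = defaultdict(deque)

    def record(self, client_ip: str, status: int, now: float) -> bool:
        """Record one response and return True if the client should be filtered."""
        hits = self._hits[client_ip]
        # Discard 404s that have aged out of the window.
        while hits and now - hits[0] > self.window_seconds:
            hits.popleft()
        if status == 404:
            hits.append(now)
        return len(hits) > self.max_404s


if __name__ == "__main__":
    monitor = NotFoundMonitor(max_404s=3, window_seconds=10.0)
    for t in range(5):
        print(t, monitor.record("198.51.100.7", 404, now=float(t)))
```

Keying by IP is the simplest option; attackers who rotate addresses would call for a coarser key, such as a subnet.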

2. Login Attacks

Attackers often attempt to brute-force login forms with common usernames and passwords. Besides putting user accounts at risk, such attempts also create server strain. By monitoring login traffic and identifying suspicious login behavior, you can prevent these attacks while ensuring normal user access remains unaffected.
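One common way to monitor login traffic is to count failed attempts per client IP and per username within a time window, and to refuse further attempts once either count crosses a limit. The sketch below uses a `LoginGuard` class and limits invented for this example; it assumes the caller checks `is_blocked` before verifying credentials and calls `record` afterwards.

```python
from collections import defaultdict
from typing import DefaultDict, List


class LoginGuard:
    """Blocks login attempts after too many recent failures (hypothetical limits)."""

    def __init__(
        self,
        max_failures_per_ip: int = 10,
        max_failures_per_user: int = 5,
        window_seconds: float = 300.0,
    ) -> None:
        self.max_failures_per_ip = max_failures_per_ip
        self.max_failures_per_user = max_failures_per_user
        self.window_seconds = window_seconds
        self._ip_failures: DefaultDict[str, List[float]] = defaultdict(list)
        self._user_failures: DefaultDict[str, List[float]] = defaultdict(list)

    def _prune(self, failures: List[float], now: float) -> List[float]:
        # Keep only failures that are still inside the window.
        return [t for t in failures if now - t <= self.window_seconds]

    def is_blocked(self, ip: str, username: str, now: float) -> bool:
        """Return True if this IP or this account has too many recent failures."""
        self._ip_failures[ip] = self._prune(self._ip_failures[ip], now)
        self._user_failures[username] = self._prune(self._user_failures[username], now)
        return (
            len(self._ip_failures[ip]) >= self.max_failures_per_ip
            or len(self._user_failures[username]) >= self.max_failures_per_user
        )

    def record(self, ip: str, username: str, success: bool, now: float) -> None:
        """Record the outcome of a login attempt that was allowed through."""
        if success:
            # A successful login clears the account's failures but not the IP's,
            # so one valid credential does not reset a source guessing many accounts.
            self._user_failures.pop(username, None)
        else:
            self._ip_failures[ip].append(now)
            self._user_failures[username].append(now)
```

Counting per username catches many guesses against one account, while counting per IP catches one source cycling through many accounts; legitimate users who mistype a password a few times stay under both limits.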

3. Crawlers from Low-Cost Data Centers

Certain low-cost hosting providers, such as Hetzner, DigitalOcean, Alibaba Cloud (AliCloud), and OVH, are frequently used to run automated spiders. Since operating bots on these platforms is inexpensive, they are a common source of unwanted crawling traffic. Recognizing and filtering requests from these environments saves bandwidth and helps keep your servers stable.
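Filtering by hosting provider usually comes down to checking each client IP against the network ranges those providers operate. The sketch below does that check with Python's standard `ipaddress` module. The ranges in it are placeholder documentation prefixes (RFC 5737 and RFC 3849), not real Hetzner, DigitalOcean, Alibaba Cloud, or OVH ranges, and the provider names are hypothetical; a real deployment would load current ranges from an IP-to-ASN database or a similar source and refresh them regularly.

```python
import ipaddress
from typing import Dict, List, Optional, Tuple, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]

# PLACEHOLDER ranges from the IETF documentation blocks; NOT real provider ranges.
DATACENTER_NETWORKS: Dict[str, List[str]] = {
    "example-hosting-a": ["192.0.2.0/24", "2001:db8:a::/48"],
    "example-hosting-b": ["198.51.100.0/24"],
}


def build_lookup(networks_by_provider: Dict[str, List[str]]) -> List[Tuple[Network, str]]:
    """Parse CIDR strings once so each request only does membership checks."""
    return [
        (ipaddress.ip_network(cidr), provider)
        for provider, cidrs in networks_by_provider.items()
        for cidr in cidrs
    ]


def datacenter_provider(ip: str, lookup: List[Tuple[Network, str]]) -> Optional[str]:
    """Return the provider whose network contains `ip`, or None if no range matches."""
    addr = ipaddress.ip_address(ip)
    for network, provider in lookup:
        # Membership is simply False when address and network are different IP versions.
        if addr in network:
            return provider
    return None
```

A linear scan is fine for a short list; with thousands of prefixes, a sorted or radix-tree structure keeps lookups fast.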

4. Programmatic Access via Application User Agents

Many bots identify themselves with the default user agents of HTTP client libraries and command-line tools, such as okhttp, curl, Python-urllib, Go-http-client, and node-fetch. These automated requests inflate server load and distort your analytics or ad reporting. Identifying such user-agent strings and enforcing rules against them allows you to cut out invalid programmatic traffic.
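A simple version of such a rule is a case-insensitive match on the leading product token of the `User-Agent` header, as in the Python sketch below. The pattern is an assumption built from the clients named above, and the exact strings they send vary by library and version. Because any client can set an arbitrary `User-Agent`, a rule like this only catches bots that keep their library's default, so it works best alongside the other signals in this article.

```python
import re
from typing import Optional

# Leading tokens of default User-Agent strings sent by common HTTP clients,
# e.g. "curl/8.4.0", "okhttp/4.12.0", "Python-urllib/3.11", "Go-http-client/1.1".
# The list is illustrative, not exhaustive.
PROGRAMMATIC_UA = re.compile(
    r"^\s*(?:okhttp|curl|python-urllib|go-http-client|node-fetch)\b",
    re.IGNORECASE,
)


def is_programmatic(user_agent: Optional[str]) -> bool:
    """Return True if the User-Agent starts with a known HTTP-library token."""
    if not user_agent:
        # Empty or missing headers are left to other rules in this sketch.
        return False
    return PROGRAMMATIC_UA.match(user_agent) is not None
```

The `\b` word boundary keeps the rule from firing on unrelated products whose names merely begin with one of these tokens.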

5. Surging Traffic from Countries Without Users or Audiences

Unexplained traffic spikes from regions where you have no business presence or audiences often signal invalid or fraudulent traffic. By analyzing geo-based traffic flows and filtering out irrelevant regions, you can safeguard your reporting accuracy and reduce waste.
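One way to make "surging traffic" concrete is to compare request counts per country for a recent period against a baseline from a comparable earlier period, and to flag countries that either never appeared before or grew by a large multiple. The sketch below assumes country codes have already been assigned by a geo-IP lookup (not shown); the function name and both thresholds are hypothetical.

```python
from typing import Dict, List


def flag_country_surges(
    baseline: Dict[str, int],
    current: Dict[str, int],
    min_requests: int = 500,
    surge_ratio: float = 10.0,
) -> List[str]:
    """Return country codes whose current traffic looks anomalous against the baseline.

    `baseline` and `current` map ISO country codes to request counts for two
    periods of equal length. Countries below `min_requests` are ignored as noise.
    """
    flagged = []
    for country, count in current.items():
        if count < min_requests:
            continue
        expected = baseline.get(country, 0)
        # No history at all, or growth by at least `surge_ratio`, counts as a surge.
        if expected == 0 or count / expected >= surge_ratio:
            flagged.append(country)
    return sorted(flagged)
```

With a baseline of `{"US": 10000, "SG": 20}` and current counts of `{"US": 11000, "SG": 900, "VN": 1200}`, this flags `SG` (45 times its baseline) and `VN` (no prior traffic), while the modest US growth passes.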

How WAF360 Helps

WAF360 provides comprehensive data attributes and intelligent rules to help you identify and block unwanted traffic.

With better visibility and control, you can reduce server and CDN costs, protect ad inventory quality, ensure accurate analytics and reporting, and enhance user experience as well as website performance.

Traffic management goes beyond stopping scrapers. It focuses on protecting your entire digital ecosystem. With WAF360, you gain visibility and control over every request hitting your website, ensuring your resources are used efficiently and effectively.
